In this post we apply this approach to classify documents, and we do a comparison between this approach and the canonical bag of words.

The comparison test will be done through the ensemble method already showed in the last post.

Features

The idea is to build the features set considering constructs of co-occurrences instead of single words as usually done with bag of words.

- The prominent advantage of this approach is well rendered when the corpus contains structured features as for instance "bank account, invoice number" and so on.

- The biggest disadvantage lays in the length of the feature set and consequently in the length of the vectors built to represent each document.

Let me clarify the last point: consider for instance a corpus of 100 words; in this case the dimension of each vectors will be less or equal to 100. Let's try now to consider the co-occurrences of these 100 words, and just to simplify the process, let's focus on the co-occurrences of two words (so we consider the closeness of two words), in this case the length of the vector is 100*100 =10000!!.

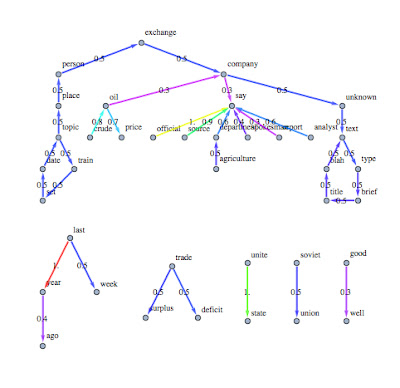

The image below represents the graph of the co-occurrences extracted considered the highest ranking (the ranking has been assigned in the way described in this post applied to the data set used to explain the ensemble method based on ADA boost M1).

|

| The most important features extracted through the LSA method. |

Vectors

To build the vectors I used the first 15000 structured features extracted by the latent semantic method and I considered just features composed by 2 words (co-occurrences).

To mitigate the complexity to crunch vectors with dimension so high, I built the training and test set using sparse matrixes of boolean values.

The advantage of the sparse matrix working with SVM is related to the small memory consumption required to select the support vectors.

Someone could argue that inner product of sparse array requires additional effort in the kernel evaluation, but if the vectors contain only boolean values, this extra complexity is negligible.

Comparison

To perform a comparison test, I used the same sets and the same strategies of learning.

In both scenarios, I trained the system through the ensemble techniques described in the last post (based on the boos method of ADA boost M1).

Results:

Before to discuss the results let me show the confusion matrixes.

|

| Confusion Matrix for LSA based classifier. (How to read it?...look at the former post) |

|

| Confusion Matrix for "Bag of Words" based classifier. (How to read it?...look at the former post) |

|

| In blue it has been represented the results for LSA based approach. In red it has been represented the results for Bag of Words approach. |

As you can see, even if the results obtained with the LSA classifier are not so bad, the traditional approach based on bag of words (in this experiment extracted through TD-DF) returns better results.

Why?

The training set used generated around 8.000 features (single words) and the vectors have been built using 500 features (6.25% of the total).

The size of the features having relevant ranking in the LSA approach is around 15000, and to ensure the same conditions of the test I trained the system choosing again 500 features (3.33% of the total).

Basically the useful information to classify the docs in LSA approach is spread over a bigger feature set.

Conclusion

As we have already seen in the past, doesn't exist a technique that perform better than other approaches with every data set. With this data set (a sub set of the Reuters 21578) the LSA requires more physical resources and returns worse results.

If we scratch the surface of the results, we can observe that it detects different aspects of the corpus to classify the docs: for instance it performs better with "GRAIN" category. So I wonder if we can take advantage of this aspect to ensemble this approach with the bag of words to improve further the accuracy (as suggested by a follower of this blog) !

...We will see the results of this conjecture the next post.

As usual: Stay Tuned!!

Cristian

Conclusion

As we have already seen in the past, doesn't exist a technique that perform better than other approaches with every data set. With this data set (a sub set of the Reuters 21578) the LSA requires more physical resources and returns worse results.

If we scratch the surface of the results, we can observe that it detects different aspects of the corpus to classify the docs: for instance it performs better with "GRAIN" category. So I wonder if we can take advantage of this aspect to ensemble this approach with the bag of words to improve further the accuracy (as suggested by a follower of this blog) !

...We will see the results of this conjecture the next post.

As usual: Stay Tuned!!

Cristian

Very interesting. Here's something that may be related that I have tried to simulate in past musings, but lacked time to pursue. I believe that your linkage of coincident words might make this a potentially practical approach.

ReplyDeleteBase thought is that static semantic ontologies are "trustworthy" and good for finding linked events through the standard triplet construction. The SVG/bag of words/ your new method have the advantage of growth and adaptive performance when new documents are added at a future time. My thought was to be able to use the adaptive properties of the growing linkage structures (ref. your diagrams) with constraint to have these fit into the triplet constructs of an existing ontology. The constraint imposed on the SVG or bag of words is essentially a forced set of "known" vector transforms of the ontolgical past document contents, with new documents added and document vectors with incorporation of new words or now coincident word groups in relation to these "known" constructions. The resulting vector set of documents and weights are now available for insertion into the ontology in automated fashion, rather than human insertion. This lends an adaptive component to otherwise static ontological forms, and steadies the adaptive nature of the SVG-like approach, without denying the discovery of new combinations of words, weighting, vector discovery by document, etc. I haven't looked at this problem for several years at this point, and perhaps there already exists work in this area for adaptive growth of ontologies. I'd be interested in hearing about what is current.

Hi Phyllis,

Deletethanks for your contribute and sorry for being late in reply you.

As I wrote in linkedin (http://www.linkedin.com/groupAnswers?viewQuestionAndAnswers=&discussionID=96161011&gid=115439&commentID=70177464&trk=view_disc&ut=0bQ1DJfwQ1jR81) I found out some interesting works as for instance: http://www.cs.princeton.edu/~blei/papers/BleiNgJordan2003.pdf .

In this article has been showed a technique based on quite similar to your work called "Latent Dirichlet allocation".

One of the interesting thing of this paper is the test done on document classification using exactly the same data set I used so far.

Unfortunately the test has been done only on two categories (while our tests are based on 4 categories) and the results doesn't seem so brilliant.

Of course it doesn't mean that the technique doesn't work! As we know, doesn't exist a method that fit every kind of data set!

By the way, the approach could be more useful for indexing and entity extraction tasks.

cheers

cristian

very interesting, thanks

ReplyDeletehatay evden eve nakliyat

ReplyDeleteısparta evden eve nakliyat

erzincan evden eve nakliyat

muğla evden eve nakliyat

karaman evden eve nakliyat

TEOLD

B2D45

ReplyDeletePepecoin Coin Hangi Borsada

Bayburt Şehir İçi Nakliyat

Aptos Coin Hangi Borsada

Yozgat Lojistik

Hakkari Şehirler Arası Nakliyat

Aydın Evden Eve Nakliyat

Karapürçek Boya Ustası

Çerkezköy Çilingir

Hatay Parça Eşya Taşıma

2A2D5

ReplyDeleteBartın Evden Eve Nakliyat

Amasya Şehirler Arası Nakliyat

Hakkari Lojistik

Sincan Boya Ustası

Trabzon Evden Eve Nakliyat

Burdur Parça Eşya Taşıma

Ünye Boya Ustası

Cointiger Güvenilir mi

Amasya Lojistik

634E2

ReplyDeleteAdıyaman Parça Eşya Taşıma

Keçiören Fayans Ustası

Ünye Oto Boya

Yozgat Şehirler Arası Nakliyat

Mardin Parça Eşya Taşıma

Etimesgut Boya Ustası

Amasya Şehir İçi Nakliyat

Manisa Lojistik

Yobit Güvenilir mi

C1DC1

ReplyDeleteKayseri Evden Eve Nakliyat

Binance Referans Kodu

Sivas Evden Eve Nakliyat

Kucoin Güvenilir mi

Kırıkkale Evden Eve Nakliyat

Ünye Boya Ustası

Çerkezköy Organizasyon

Çankaya Parke Ustası

Eryaman Alkollü Mekanlar

9BC21

ReplyDeleteEtimesgut Parke Ustası

Keçiören Fayans Ustası

Uşak Evden Eve Nakliyat

Çerkezköy Yol Yardım

Maraş Evden Eve Nakliyat

Kars Evden Eve Nakliyat

Etimesgut Fayans Ustası

Çerkezköy Motor Ustası

Balıkesir Evden Eve Nakliyat

3C042

ReplyDeletebinance referans kimliği nedir

referans kimliği

lavanta sabunu

kantaron sabunu

canlı sohbet ucretsiz

bitexen

tarçın sabunu

okex

bitexen

7A776

ReplyDeletebinance

canlı sohbet ucretsiz

bitcoin ne zaman çıktı

bybit

poloniex

bitcoin nasıl oynanır

gate io

bitexen

bitcoin seans saatleri

CB796

ReplyDelete----

----

----

----

----

----

----

matadorbet

----