We have also noticed that this approach doesn't return better than the traditional bag of words.

I would now explore some derivation of this approach, taking advantage of the graph theory.

In the following step I'm gonna to show you the alternative strategy applied to a corpus for the category "GRAIN" (referred always to our official data set REUTERS 21578).

Co - occurrences

The first step is to extract the co occurrences as we already explained in the former posts.

The test has been performed considering just 100 documents, below the graph showing the score assigned to all (>5000) co occurrences extracted by the proc.

|

| Sorted score assigned to the co occurrences extracted from the GRAIN category (100 docs). |

In this step we build the graph of the co-occurrences of two words to obtain a representation of the links among the other co occurrences.

Here you are the graph obtained from this step:

|

| The Graph of the co-occurrences linkage for the GRAIN category |

The Kcore algorithm could help in doing that. It gives groups of vertices that are connected to at least k others.

To build it you can remove repeatedly vertices of out-degree less than k.

Below the k core components from the original graph (the k chosen here is five).

|

| k core components extracted from the original graph |

The entities extracted

Form the k-core graph we could extract the shortest path for each combination of two vertexes.

And we can compare the paths obtained with the document in the corpus to select the paths really existing in the documents (this trick of course should be applied only on the training set!).

The procedure returned 526 entities.

Here you are the longest entities extracted:

1 rice farmer reach acre

2 department say last summer

3 contract trade agricultural

4 month pct increase

5 summer grain harvest

6 board trade agricultural

7 record tonne previous

8 rice farmer reach

9 cash crop include

10 high rate national

11 pay crop loan

12 cotton payment reach

13 major farm group

14 future year ago

15 future trade area

16 total tonne intervention

17 offer exporter issue

18 import tonne agricultural

19 bushel price current

20 bushel corn producer

The graph clustering

Another interesting things we can do with such entities is to isolate in the kcore graph the nodes that describe the entities and apply a clustering algorithm over the graph connections.

I decided to apply in this case as a clustering distance the "community modularity".

The clustering returns unstructured features.

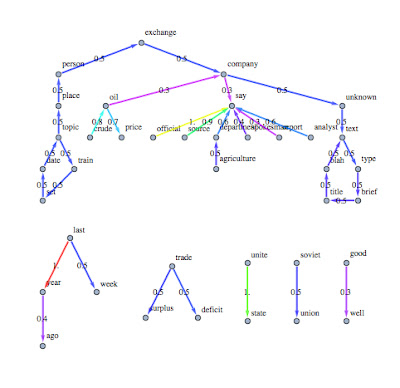

Here you are the clustered graph and the unstructured features clustered:

|

| The clustered k-core graph |

|

| The unstructured features clustered |

As you can see last step tends to group together words semantically related:

Consider for example the second cluster, it contains almost all words related to period of time topic as "winter, year, month, ago", the fourth represents political aspects, the fifth describes quite precisely economic aspects.

The third cluster is gathering aspects strictly related with the main topic "grain", while the first one seems group words not related with the topic.

Of course it's not 100% accurate, and is still open the question how to use this clusters.

In the next post I'm gonna extend this experiment to other categories, and I would like to check if the entities extracted can be used to improve the accuracy of our classifier.

...Stay tuned

cristian