As you know, I like write by myself the algorithms and I like show alternative paths, so I've decided to explain a powerful clustering algorithm based on the SVM.

To understand the theory behind of SVC (support vector clustering) I strongly recommend to have a look at: http://jmlr.csail.mit.edu/papers/volume2/horn01a/rev1/horn01ar1.pdf . In this paper you will find all technical details explained with extremely clearness.

As usual I leave the theory to the books and I jump into the pragmatism of the real world.

Consider the problem of a set of points having an ellipsoid distribution: we have seen in the past that K-means doesn't work in this scenario, and even trying different tweaks changing the position of the centroids and its number of centroids, the final result is always unacceptable.

SVC is a clustering algorithm that takes in input just two parameters (C and q) both of them real numbers. C is to manage the outliers and q is to manage the number of clusters. Be aware that q is not directly related with the number of clusters!! Tuning q you can manage the "cluster granularity" but you cannot decide a priori the number of clusters returned by the algo.

How to implement SVC.

There are many implementations of SVC, but I would like to show different tools (I love broaden the horizons...), so the ingredients of the daily recipe are: AMPL & SNOPT.

Both of them are commercial tools but to play with small set of points (no more than 300) you can use for free the student license!

AMPL is a comprehensive and powerful algebraic modeling language for linear and nonlinear optimization problems, in discrete or continuous variables and SNOPT is a software package for solving large-scale optimization problems (linear and nonlinear programs).

AMPL allows the user to write the convex problem associated to SVC's problem in easy way:

|

| The AMPL file for SVC |

In the former image, after the statement "param x: 1 2 3 :=" there are the list of 3D points belonging to our data set.

One of the characteristics of SVC is the vector notation: it allows to work with high dimensions without changes in the development of the algorithm.

2D Problem

Let's show the application of SVC in our ellipsoid data set

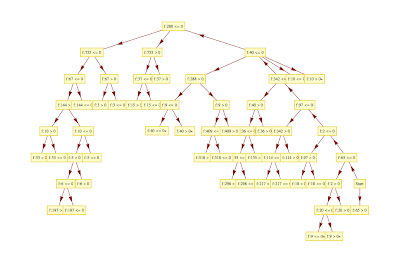

The above image shows the clusters (depicted like connected components of a graph...read further details in the mentioned paper) returned by SVC and plotted by Mathematica.

3D problem

Just to show the same algorithm working in 3D on the same problem:

And Here you are the SVC results plotted by Mathematica:

Let's show the application of SVC in our ellipsoid data set

|

| 300 pt having ellipsoid distribution. The first contour of SVC has been depicted in black. |

3D problem

Just to show the same algorithm working in 3D on the same problem:

|

| 3D points having ellipsoid distribution. |

|

| SVC applied on the former data set |

As you can see in both scenarios SVC is able to solve the easy problem that K means cannot manage.

PS

We will continue the text categorization in the next post... From time to time I allow to myself some divagation.