Finally, after many preambles we have all conditions to classify the documents. So far we have seen:

- How to translate a document in a vectors understandable by algorithms;

- How to analyze the data set with density analysis;

- How to reduce the dimension of the problem (features reduction)

Well, now we have the all ingredients to classify the documents.

To show a couple of approach, I have extracted 4 classes from our REUTERS data set (choosing the criteria “TOPICS”, and considering the easier condition of documents belonging only to one class): “GOLD”,“GRAIN”,”OIL”,”TRADE”.

In this stage I put my self in a “comfortable zone” so, I considered the easiest way to classify the documents, that is, I built the vectors considering just ONE “bag of words”.

Basically I extracted the features set from the entire training set without any distinction of the specific class of the document.

In this approach we are delegating the entire complexity of the task to the classifier!

As we can see below, be lazy never pay!! (…I hope that it makes sense in English… ).

Let’s move on the results.

As I always recommend, never play with one toy! Be always curios and try different approach to solve the problem.

In this spirit for the time being I have done some tests using SVM (in LIBSVM implementation) and C.5 (the free version).

I embedded in Wolfram Mathematica the scripts to run both LIBSVM and C5 (contact me at cristian.mesiano@gmail.com if you desire the source); in this way all tasks (data preparing, features extraction, vector building and so on) can be done using only one interface.

| Notice that the training set has been rescaled and weighted with different scores. |

|

| Accuracy For SVM Classifier: Best results with Linear Kernel |

As you can see, the accuracy measured on the training set is not brilliant! The OIL class is in the 50% of the case mislead. Moreover we have to consider that:

· we are working with an easy data set and with few classes.

· The accuracy has been measured only on the training set (…remember that the first error of the newbie is to focus much more on the training env.). The training set contains only points already observed by the classifier. And analysis done only on it can hide overfitting problems!!

Even trying with different tuning, and with different kernels, the accuracy doesn’t improve.

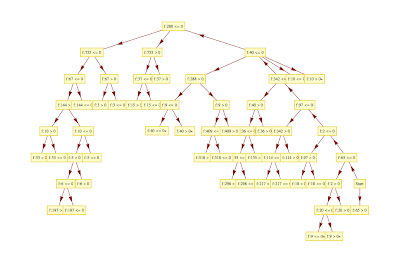

The situation doesn’t improve with C5: as you can see below, the accuracy is pretty much the same achieved via SVM.

|

| The Decision Tree returned by C5 (plotted with Mathematica). |

| |

|

So Do we give up?

NEVER.

In the next posts we will see how improve the accuracy introducing boosting techniques.

…There are thousands of details to consider and to explain like:

linear kernels for SVM, how to measure the accuracy, how to improve the training set and avoid the over fitting…

We will see that in the next posts.

Stay Tuned

Cristian

Well, I consider that any serious classification investigation should have a kind of plan were you include all the classification algorithms you think that might work on that kind of data Iunless you are working on a problem were it has already been showned that a certain algorithm is the best fit for it). On top of that you need to plan all the tests you are going to do with each algorithm, for example the parameter tunning which is extremelly complicated in SVM. In my experience you need to dedicate lots of time in testing many different kernels and each one needs a proper investigation of its parameter, (Using svmlib I found extremelly important for the outcome of the classifier the values of eps and gamma, but very hard to find, at least in my case searching those values with an algorithm such as gradicent descent did not work, it worked trying numbers that I calculated based on a paper I read).

ReplyDeleteThis is why the integrated frames of datamining are very usefull, you can design all the tihngs you are going to try, the order, meta selections etc.

Nevertheless I think it is much better when is possible to do serious statistical exploration of the data and understand the math and logic behind those algorithms and their parameters in order to reach a good solution. But I think the current tendency is not the deep understanding of them but just try try tyr with the aid of those programs that organize all the experiments for you.

Then you are completelly right about the next steps

Hi Mariana,

ReplyDeleteFirst of all thanks for your contribute.

Let me explain better my point of view, because I think there is a big misunderstanding: I'm totally with you when you say "any serious investigation should have a kid of plan"!!

indeeed I started the blog showing some basic pillar of the classification:

- linear classification and kernel mapping;

- data set preparing: tagging, statistical analysis, density analysis.

- feature extraction: different approaches

- feature reduction: showing for example for the PCA the limits of it, and a comparison with another algo.

To conclude in the former post I posted a Road map to classify our dataset.

Of course all topics are so huge and complex that in a blog is not possible to explore properly and exhaustively.

My wish is just to stimulate curiosity on that: there are hundreds of books, and paper to go into more depth!

About the SVM you mentioned: I know very well that the tuning, the choice of the kernel is not a task performable with the strategy "try and try!", but is out of the scope of this blog talk about the theoretical issues behind of that.

The same reasoning is valid for every single algo I mentioned so far: you have to study a lot the theory behind before to say: it fits with my need!

Sorry if your impression is that I'm just clicking a button to run a tool!

...Honestly I hate the pre built tool that organize for you everything.

...May be I'm too distrustful but I never used (with exception of C5) a prebuilt tool: i alwaya implemented everything by my self!

...Also the wolf dual for the SVM!

BTW, thanks again for your suggestions

Regards

c.

You mentioned before the kernel parameters in LIBSVM.

ReplyDeleteIn my experience I surprisingly noticed that the best kernel for the text categorization is the linear kernel!

And that the best strategy is to play around the weight of capacity factor for each class (here the simulated annealing and the gradient strategy work very good!).

What is not clear by my side is that using a different implementation (i'm talking just on different implementation and not different algorithm) this finding is not always true!

For example the same data set processed with an SVM based on AMPLE and SNOPT returns better results with a gaussian kernel!

Maybe due to the infinite Hilbert space in which the data are mapped, the gradient strategy is useless.

...However to mitigate the risk to spend plenty time on the tuning,as I'm going to show in the next posts, I prefer reduce the complexity of the problem (with a divide et impera strategy) and work with many boolean classifiers: In this way we have to tune more classifier but the every classifier has to solve easier problem! (basically a boosted approach like mentioned in the previous post).

I would be glad to share mutually your experience in this field: feel free to arrange a post and I'll publish it with pleasure.