I was working on the post related to the relation between IFS fractals and Analytical Probability density, when an IT manager contacted me asking an informal opinion about a tool for Neural Networks.

He told me: “we are evaluating two different tools to perform general purpose data mining tasks, and we are oriented toward the tool xxx because the neural network module seems more flexible”.

My first reaction was: “sorry, but you have to solve a specific problem (the problem is a little bit complex to explain but it is a classical inversion function problem) and you are looking for a generic suite: I’m sincerely confused!”

He justified himself telling “If I buy a generic suite I can reuse it in a next time for other problems!”: bloody managers! Always focused to economize 1 $ today to loose 10$ tomorrow :)

As you can imagine the discussion fired me, so I started to investigate about this “flexibility capabilities” (this is for me the key point to evaluate a solution) and I began long emails exchange with this enterprising manager.

Let me sum up the main questions/answers about this module.

Q: What kind of Neural Network does the suite support?

A: Back Propagation (just for multilayer perceptron) and SOM.

The suite has a nice GUI where you can combine the algorithms like lego-bricks. (it is very similar to WEKA’s gui).

Q: What kind of error function does it support (limited to BP nets)?

A: I don’t Know, but I suppose just the delta based on target vs output generated;

Q: What kind of pruning algorithms does it support?

A: It supports a weight-based algorithm, but there is no control on it.

Q: How many learning algorithms does the BP net support?

A: It supports just BP with momentum.

Q: What kind of monitors does it support to understand the net behavior?

A: It has a monitor to follow the output error on the training set.

Q: What is the cost of this suite?

A: The suite (actually it is pretty famous data mining suite ...don’t ask more about it…) price is around 10 thousand $ for limited number of users (it doesn’t embrace neither the support costs nor integration costs nor dedicate hardware).

Q: do you have the chance to visualize the error surface?

A: I’m a manager, what the hell does this question mean?

My recommendations: don’t buy it.

Here you are the key points:

1. No words, Bring real evidences!

As usual, instead of thousands words I prefer get direct and concrete proof about my opinion, because my motto is : “don’t say I can do it in this way or in this way, but just do it!” (…hoping that it makes sense in English!).

So during this weekend I decided to implement in Mathematica a custom tool to play with Back Propagation Neural Networks having at least the same features described above.

|

| The general-purpose Neural Net Application built with mathematica: different colors for neurons to depict different energy levels., monitor to follow the learning process, fully customization for energy cost function, activation functions, ... |

My “suite” (of course, it is not really useful for productive systems) has the following features:

- Graphical representation of the net;

- Neurons dynamically colored by energy importance (useful to implement a pruning algo);

- Dynamical cost function updating;

- Learning coefficient decay (really useful but seldom present in the common suites);

- Activation functions monitor;

- Automatic setting of input/output neurons layers in compliance with training set.

2. General-purpose algorithm doesn’t mean “every one can use it to solve whatever problem”!

There is a misbelief that a general-purpose algorithms like SVM, NN, Simulated annealing, decision trees and so on, can solve every kind of problem without human interactions, customization and expertise.

For the XOR problem, maybe also a newbie can find the right setting for a neural net, but in the real world the problem are so complex that the overall accuracy is a combination of many factors, and sometimes the algorithm choice has a limited influence on the entire picture!

Let's start to show a sample toy the XOR problem (as you know is a classical example brought to explain problems without linear solutions).

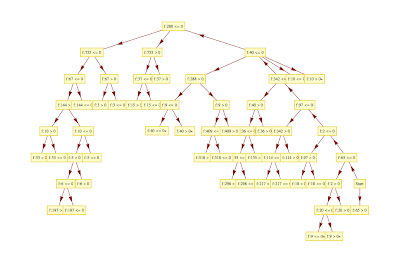

I instantiated a network having 1 hidden layer and 2 hidden neurons pro layer. I fixed the Beta param for the activation function to 1 and eta learning equal to .1 and i Randomized the weights in the range [-1,1] (as reported in the main tutorials ).

here you are the network able to solve the problem and the related behavior for the cost function:

|

| Neural Network for XOR problem: 2 input neurons , 2 hidden neurons, threshold neuron and the output. |

|

| The Square Error decreasing for the above network. As you can see a stable configuration is achieved after 30000 learning cycles!! |

Notice that with the standard configuration, the problem takes around 30000 cycles to be solved!!!

With a little bit of experience, the same results can be achieved with less than 300 cycles. Indeed, changing the eta learning, and the network configuration, we obtain:

|

| Another configuration to solve the XOR problem: 1 hidden layer and 7 hidden neurons: as you can see after 300 cycles the learning the error is negligible. |

Observe the hidden neurons energy bar: as expected only one hidden neuron (H1,2) gives a concrete contribute to the network (this is compliance with the theory that only one hidden neuron is required to solve the XOR problem!!).

A novel data miner could say: "It is easy: I increase the number of hidden layers and the numbers of neurons and the training will be faster!": TOTALLY FALSE!!

Indeed, (especially for the neural network based on the classical Werbos delta rule) the complexity of the net, often obstacles the learning producing oscillatory behaviors due to neuron saturation and overfitting.

|

| A Neural network composed by 2 hidden layers and 8 hidden neurons trained to solve the XOR problem. As you can see even if the network is more complex than the former example, it is not able to solve the problem after 1000 cycles (the former net solved the problem after 300 cycles). |

We have seen that just with a trivial problem there are many variables to consider to obtain a good solution. In the real world, the problems are much complex and the expectation that a standard BP can fit your needs is actually naive!

3. Benefits & thoughts.

How much time does take an implementation built in house?

I spent more or less 6 hours to replicate the standard BP, with the "neurons colored version"... and in this slot of time, my 4 years old daughter tried to "help" me to find the best color range and the most fancy GUI :) typing randomly the keys of my laptop....

- The effort to replicate the model in a faster language (like java) could take 4 times the effort to code this in Mathematica: that is no more then 1 week development.

- The customization of the standard BP for the specific problem, for example changing the objective function, could be really expensive and often not feasible for a standardize suite.

- The integration of the bought solution takes almost always at least 50% of the development efforts, so build in house can mitigate these costs because your solution is developed directly on your infrastructure and not adapted to work with it!

- The advantage to develop in house a product requiring high competences lays in the deep knowledge that the company acquires, and the deep control of the application under every different aspect: integration, HW requirements, business requirements and so on. The knowledge is the key to win the competitive challenges in these strange world. Of course, train in house team having deep scientific knowledge oriented to the "problem solving" is initially expensive and risky, but in my belief the attempt to delegate this core "components" outsourcing totally these expertise to external companies will produce an impoverishment of the value of a company.

The BP simulator I implemented is totally free for students, and/or scholastic institutions: contact me

at: cristian.mesiano@gmail.com.

...We will use this simulator to highlight interesting points in the amazing world of data mining.

Stay Tuned,

Cristian